In April 2019, WFD launched a new and innovative activity and outcome monitoring system called the Evidence and Impact Hub (EIH). We discussed how this allowed us to collect better data on our programmes in our last post here.

With any new tool comes the need to change practices. At the American Evaluation Association’s annual conference this year, a good handful of sessions were dedicated to some version of this problem. Digitising monitoring is clearly something many NGOs struggle with.

WFD has certainly not found a magic solution to this problem, but we can report very good uptake and a large amount of usable data in the eighth month after launch.

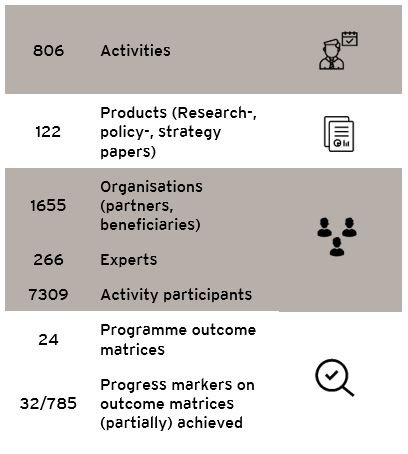

At the time of writing, WFD’s monitoring system had records on the following:

That means that for about 87% of WFD’s programmes, we have a good level of information, allowing us to make decisions. It is almost real-time, and in many ways richer information than we had in narrative reports.

We are using this data to report to senior management, governance bodies and donors, to support internal and external comms, and to manage and gain insights on our programmes.

Here are some practical tips, or aspects that helped us achieve this level of uptake:

- Building on existing pain points: most of our staff/users were not very enthusiastic about our existing monitoring processes and the focus they placed on narrative reporting. We did extensive interviews with our key users (programme staff in country offices) and found that most teams were spending a large amount of time writing reports for both internal and external stakeholders, with very little feedback or other benefit from that work. There was a demand for change at all levels, and we tried our best to make the new system solve those pain points by reducing the time required for input and by facilitating visibility across the organisation.

- Focusing on long-term, user-centric design: when we launched the first version of our monitoring tool in April, we made it clear to our stakeholders that this was by no means a finished product. We invited comments in every training and follow-up communication, asking for ideas for making the tool fit our users’ needs better. Many of them took the opportunity and suggested new fields, options in dropdowns, or entirely new objects for monitoring. All of them are now well engaged and provide good-quality data through the tool, feeling a little bit of ownership of it.

- Taking new software routes – flexibility is everything: typically, organisations procuring new software tools have two options: buying and configuring an off-the-shelf tool, or having a custom solution built by a software agency. We chose a third way: using a drag-and-drop tool for building web apps (examples of these are Knack, Caspio and Zoho Creator), we made our own app. This does not require in-house software developers; instead, the app is developed and run by two of our MEL staff. Not having a middleman or agency has given us vital flexibility and speed: as we were co-developing the tool with our users, being able to make changes within minutes after meetings was a key success factor. It also means that the total solution costs us less than £1,000 per year, plus staff time (which is likely not significantly higher than it would have been had we contracted out the building process, given the communication that would have required).

- Committing to long-term, agile processes: neither building the tool nor supporting its users has stopped, and neither will be finished in the near future. The tool we launched in April looked very different to the tool today, which we are proud of. At the same time, we recognise that users take on board information about how best to use a new system mainly at the point when they need it for a specific task, so we have tried to align our training with that where possible. While that has cost a considerable amount of staff time, we believe it is worth it, since uptake and the resulting data output are much better for it. Organisations do themselves no favours if they invest large amounts in a software tool and then save on training, adaptation and support to the degree that the investment does not pay off.

Remaining challenges

- Data quality and comprehensiveness: we quickly realised that some fields and pieces of information are easy for staff to fill in, while others are consistently left blank or not kept up to date. This is largely related to how easy it is for staff to access the relevant information. Information that is usually required in narrative reports is easily put onto the system, as staff already had processes in place for collecting the relevant data. However, we have also added information that was not required in conventional reporting, such as the details of each participant at our activities in digital format. This will provide us with unprecedented opportunities for analysis and insight, but staff often have no digital way to collect that information and are then obliged to type up paper records. We are still experimenting with solutions to this problem.

- Duplication of work: while the MEL team was keen to drop all narrative reporting internally, and free up time for more reflective processes, there are still stakeholders that require programme teams to write down summaries of their activities and results in some format. This mainly applies to external donors, who still ask for narrative reports every three months. We have been addressing that problem by both making technical changes, including more WFD processes on the monitoring tool, and talking to donors to find common ground on monitoring. Any interested stakeholders are warmly invited to join these conversations.

- Use of data and insight: we assumed that making all of this data on programme activities and outcomes available to all WFD stakeholders would have an influence on how we make decisions and manage programmes, almost automatically. That has not happened, which is in large part due to how we communicate data. Whereas country teams have largely embedded their role of inputting data, London teams have not yet fully absorbed the EIH’s ability to extract data.

The MEL team is now starting its second steep learning curve: now that we have that data, what is the most useful way to present it, and at what times?

Next steps

There is general agreement that the Evidence and Impact Hub is a good initiative, providing useful data. However, making concrete use of the data for specific decisions remains something we need to work on. There is a demand and appreciation for this work, which makes us hopeful that we can report more concrete results soon:

In a survey among programme staff conducted in October 2019, 75% ‘agreed’ or ‘strongly agreed’ that they could see how the EIH would improve our work at WFD.

“The EIH is giving us more information than we have ever had about WFD’s work – what we do, who we work with, and how we support them. That means that we can now make much better decisions about how our work should evolve, and how we can best respond to demands and challenges from donors, partners and, of course, our own staff.”

Anthony Smith, CEO

“[The EIH] will help to show what programmes/activities are not successful and why. In this sense, the EIH can also help to find solutions for processes/activities to be improved.”

London-based programme staff

This blog post is part of our MEL matters series. MEL matters is a series on our blog about WFD’s pioneering way of monitoring and learning from programmes. As we move towards new digital tools, outcome matrices as a standard, and a combination of real-time tracking and long-term reflecting, we are sharing the lessons we learn here. We hope MEL practitioners, donors and implementing partners can learn from and contribute to our ideas.

MEL Matters: Software vs habits

Rosie Frost

Sonja Wiencke
